The demand for large-scale AI is growing fast—models are getting bigger, inference workloads are increasing, and traditional infrastructure often falls short. Most teams today juggle multiple tools to train, host, and deploy models, which slows down experimentation and increases costs.

Hedra AI addresses this gap by offering a unified infrastructure layer purpose-built for AI workloads. Whether you’re training a foundation model or deploying a custom LLM, Hedra helps you scale efficiently without the complexity of managing clusters, containers, or cloud resources manually.

By rethinking how AI infrastructure should work—simple, fast, and developer-first—Hedra enables teams to focus on building smarter products, not fighting infrastructure limitations.

🚨 Why This Blog Matters

AI infrastructure is reaching a breaking point as models grow larger and more complex. Hedra AI solves this by offering a unified, scalable, and developer-friendly platform for managing training and deployment—without the typical operational friction.

🧠 What You’ll Learn Here

This blog covers how Hedra AI supports LLM training, inference optimization, multi-cloud management, and seamless model deployment. You’ll see why it’s built for real-world AI demands—fast, flexible, and powerful.

🎯 Who Should Read This

Ideal for ML teams, AI startups, enterprises, and research labs that want to speed up model development, simplify operations, and stay cloud-agnostic.

What is Hedra AI?

Hedra AI is a modern infrastructure platform designed to simplify how developers and machine learning teams build, scale, and deploy AI models.

At its core, Hedra helps you manage high-performance compute without the usual hassle of managing Kubernetes, Docker, or multi-cloud environments. Whether you’re fine-tuning an LLM or running GPU-heavy inference, Hedra abstracts the complexity, so teams can move faster with less overhead.

Who is it for?

- ML engineers building and scaling custom models

- AI startups deploying GenAI apps

- Enterprises managing model infrastructure across teams

Key Benefits:

- Scalable AI infrastructure without manual setup

- One-click deployment for training and inference

- Centralized control over compute, models, and teams

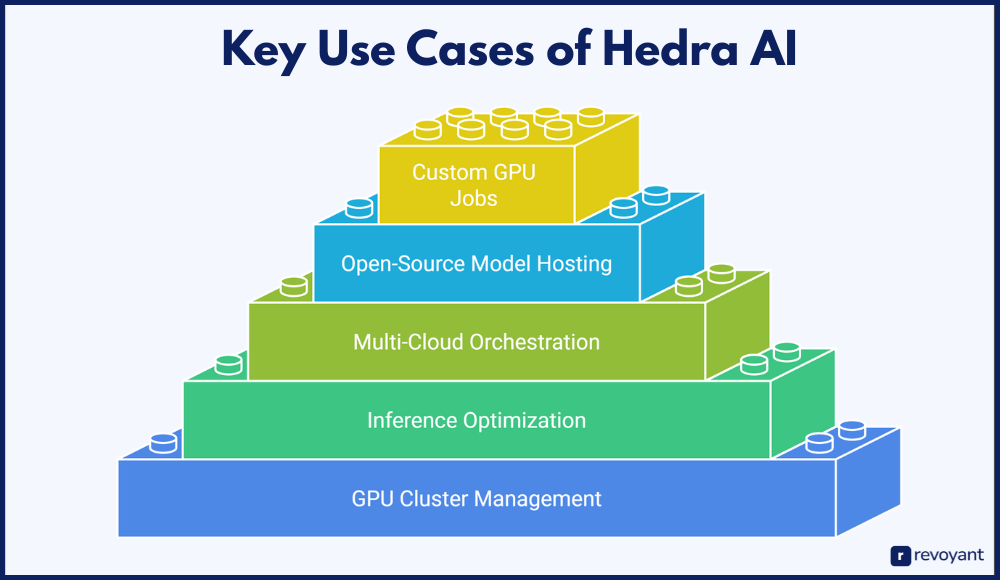

Key Use Cases of Hedra AI

Hedra AI is designed for teams working on demanding AI and ML workloads—whether you’re experimenting with foundation models, deploying production-level inference, or managing compute at scale. Here’s how organizations are using Hedra:

1. Training Large Language Models (LLMs) at Scale

Training models like LLaMA, Falcon, or Mistral requires massive GPU clusters and efficient orchestration. Hedra simplifies this by:

- Automating the provisioning of distributed GPU resources

- Supporting checkpointing and rollback for long-running jobs

- Allowing teams to monitor and manage experiments from a single dashboard

- Reducing cloud waste with intelligent auto-scaling and job routing

Why it matters: Most ML teams struggle to scale LLM training without DevOps overhead. Hedra helps them move faster with better resource control and cost efficiency.
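Hedra doesn't document how its checkpointing works. Here is a generic sketch of the pattern itself, not Hedra's API: a long-running loop periodically saves its state, and a restarted job resumes from the newest checkpoint instead of step 0. The `train` function, the file layout, and the toy "loss" state are hypothetical stand-ins.

```python
import json
import os
import tempfile

def save_checkpoint(ckpt_dir: str, step: int, state: dict) -> None:
    """Write a checkpoint atomically: dump to a temp file, then rename into place."""
    path = os.path.join(ckpt_dir, f"step_{step:08d}.json")
    fd, tmp = tempfile.mkstemp(dir=ckpt_dir)
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp, path)  # a crash mid-write never leaves a half-written checkpoint

def latest_checkpoint(ckpt_dir: str):
    """Return the newest checkpoint as a dict, or None if there are none."""
    files = sorted(
        name for name in os.listdir(ckpt_dir)
        if name.startswith("step_") and name.endswith(".json")
    )
    if not files:
        return None
    with open(os.path.join(ckpt_dir, files[-1])) as f:
        return json.load(f)

def train(ckpt_dir: str, total_steps: int, every: int = 10, crash_at=None) -> dict:
    """Hypothetical toy training loop that checkpoints every `every` steps."""
    ckpt = latest_checkpoint(ckpt_dir)
    start = ckpt["step"] if ckpt else 0
    state = ckpt["state"] if ckpt else {"loss": 1.0}
    for step in range(start + 1, total_steps + 1):
        if crash_at is not None and step == crash_at:
            raise RuntimeError(f"simulated failure at step {step}")
        state["loss"] *= 0.99  # stand-in for a real optimizer update
        if step % every == 0:
            save_checkpoint(ckpt_dir, step, state)
    return {"step": total_steps, "state": state}
```

If a run crashes at step 57, the next call to `train` with the same directory reloads the step-50 checkpoint and repeats only steps 51 onward.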

2. Optimizing Inference Workloads for Production

Inference can quickly become a bottleneck as model size grows and user traffic fluctuates. Hedra solves this by:

- Deploying GPU-accelerated, auto-scaling inference endpoints

- Offering low-latency APIs for real-time AI applications

- Supporting A/B testing and multi-version deployments

- Monitoring latency, throughput, and GPU usage in real-time

Why it matters: For SaaS AI platforms and internal tools, fast inference is key. Hedra ensures performance without overprovisioning.
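A/B testing across model versions usually comes down to a weighted traffic split. Hedra's routing API isn't described here, so this is a hypothetical, generic sketch. Each user ID is hashed to a stable point in [0, 1), so the same user always reaches the same version while overall traffic divides according to the configured weights. The version names and weights are illustrative.

```python
import hashlib

def pick_version(user_id: str, weights: dict[str, float]) -> str:
    """Deterministically map a user to a model version according to weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash the user ID into a stable point in [0, 1).
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64
    ordered = sorted(weights.items())
    cumulative = 0.0
    for version, weight in ordered:
        cumulative += weight / total
        if point < cumulative:
            return version
    # Floating-point rounding can leave `point` just above the final boundary.
    return next(v for v, w in reversed(ordered) if w > 0)
```

Hashing instead of random sampling keeps each user's experience consistent, which makes per-version metrics easier to compare.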

3. Managing Multi-Cloud and Hybrid AI Infrastructure

Juggling workloads across AWS, Azure, GCP, and on-prem hardware is complex. Hedra makes it seamless:

- Unified orchestration layer across multiple clouds

- Smart workload placement for cost-performance optimization

- Flexible deployment targets that avoid vendor lock-in

- Centralized billing and usage reporting across environments

Why it matters: Enterprises and global teams often work across regions and clouds. Hedra offers true flexibility and control.
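Cost-performance placement can be as simple as filtering the available targets by hard constraints (GPU type, free capacity, allowed regions) and picking the cheapest one that remains. The sketch below is generic and hypothetical: the `Target` fields and the `place` function are illustrations, not Hedra's placement engine, and any prices you plug in should come from your own provider quotes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Target:
    name: str
    gpu_type: str
    free_gpus: int
    usd_per_gpu_hour: float  # illustrative price, not a real quote
    region: str

def place(targets, gpu_type: str, gpus_needed: int, allowed_regions: set):
    """Return the cheapest target that satisfies every hard constraint, or None."""
    feasible = [
        t for t in targets
        if t.gpu_type == gpu_type
        and t.free_gpus >= gpus_needed
        and t.region in allowed_regions  # e.g. data-residency rules
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda t: t.usd_per_gpu_hour)
```

Real schedulers also weigh data-transfer costs and latency to users, but the filter-then-rank structure stays the same.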

4. Hosting and Serving Open-Source Foundation Models

Open-source models like LLaMA 2, MPT, and Mistral are powerful—but deployment can be tricky. Hedra handles it:

- Pre-configured environments for popular open models

- Instant model upload or pull from Hugging Face

- Production-ready serving with built-in autoscaling

- Endpoint security, monitoring, and throttling included

Why it matters: Developers can skip infra setup and go live in hours instead of days.
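Endpoint throttling is commonly implemented with a token bucket: each request spends a token, tokens refill at a fixed rate, and the bucket size caps bursts. The following is a generic single-process sketch with hypothetical parameters; it does not describe how Hedra actually throttles its endpoints.

```python
import time

class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should reject, e.g. with HTTP 429
```

The injectable `clock` makes the limiter testable without sleeping. A deployment with several replicas would need the bucket state shared across them rather than kept per process.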

5. Running Distributed GPU Clusters for Custom Jobs

Whether you’re building a diffusion model or optimizing an agent framework, Hedra supports:

- Multi-node cluster orchestration with real-time monitoring

- Job queueing, prioritization, and scheduling

- Custom Docker environments or pre-built templates

- Resilience through health checks and auto-recovery

Why it matters: GPU-intensive workloads are expensive and fragile. Hedra optimizes usage while maintaining system reliability.
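Job queueing with prioritization can be modeled as a priority queue with a first-in, first-out tie-breaker: urgent jobs jump ahead, while jobs of equal priority keep their submission order. A minimal sketch using Python's `heapq` (the `JobQueue` class and job names are illustrative, not Hedra's scheduler):

```python
import heapq
import itertools

class JobQueue:
    """Pop the highest-priority job first; equal priorities run in submission order."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker that preserves FIFO order

    def submit(self, job_name: str, priority: int) -> None:
        # heapq is a min-heap, so negate the priority to pop the largest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), job_name))

    def next_job(self):
        """Return the next job name, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```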

Core Features of Hedra AI

Hedra AI is purpose-built to simplify infrastructure management for machine learning teams. Its platform supports every stage of the ML lifecycle—from scaling compute to deploying production-ready models.

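| Feature | What It Enables |
|---|---|
| Unified Compute Orchestration | Run AI workloads across multi-cloud, hybrid, or on-prem environments without manual overhead |
| Real-Time Monitoring and Telemetry | Full visibility into compute usage and model performance |
| Model Lifecycle Management | Versioning, rollback, and automation for every model you build or deploy |
| Fast Model Deployment | GPU-accelerated, highly available model serving from the CLI or UI |
| Role-Based Access and Security | Secure access and regulatory compliance across environments |
| Integration with ML Ecosystem | Easy connection to existing ML and DevOps stacks |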

Unified Compute Orchestration

Hedra allows teams to run AI workloads across multi-cloud, hybrid, or on-prem environments without manual overhead.

What it enables:

- Launch and scale jobs across AWS, Azure, GCP, and bare metal

- Auto-scale resources based on workload demands

- Intelligent job routing for performance and cost optimization
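Demand-based auto-scaling typically follows a proportional rule. The Kubernetes Horizontal Pod Autoscaler, for example, documents `desired = ceil(current * metric / target)`. Hedra's own scaling policy isn't published, so treat this as an illustrative sketch; the function name, replica bounds, and 10% tolerance band are assumptions.

```python
import math

def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 16,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule: ceil(current * metric / target), clamped to bounds."""
    ratio = metric / target
    # Ignore small deviations so the replica count doesn't flap on noise.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))
```

For instance, four replicas averaging 90% GPU utilization against a 60% target scale to six.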

Real-Time Monitoring and Telemetry

With built-in observability, Hedra delivers full visibility into compute usage and model performance.

What it enables:

- Monitor GPU/CPU usage by job or model

- View training and inference metrics in real time

- Export logs and metrics to external tools or dashboards

Model Lifecycle Management

Hedra supports versioning, rollback, and automation for every model you build or deploy.

What it enables:

- Version control for models and training configurations

- Rollback support for failed experiments

- Automatic checkpointing and recovery options
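Versioning and rollback become straightforward once model versions are immutable and a registry records the order in which versions were promoted to serving. A minimal in-memory sketch (the `ModelRegistry` class is hypothetical, not a Hedra interface):

```python
from dataclasses import dataclass, field

@dataclass
class ModelRegistry:
    """Track immutable model versions and which one is currently serving."""
    versions: dict = field(default_factory=dict)  # version -> artifact URI
    history: list = field(default_factory=list)   # promotion order, newest last

    def register(self, version: str, artifact_uri: str) -> None:
        if version in self.versions:
            raise ValueError(f"version {version!r} already exists; versions are immutable")
        self.versions[version] = artifact_uri

    def promote(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(version)
        self.history.append(version)

    @property
    def live(self):
        """The version currently serving, or None before the first promotion."""
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        """Revert serving to the previously promoted version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]
```

Rolling back only re-points serving at the previous version; the failed version stays registered, so it can still be inspected or re-promoted later.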

Fast Model Deployment

Deploy and serve models with GPU acceleration and high availability, using either the CLI or the UI.

What it enables:

- One-click deployment for LLMs and open-source models

- Scalable APIs for production integration

- Built-in load balancing and auto-scaling

Role-Based Access and Security

Built for teams, Hedra ensures secure access and regulatory compliance across environments.

What it enables:

- Granular user and project-level permissions

- Support for GDPR, HIPAA, and SOC 2 compliance

- Integration with Okta, Azure AD, and custom SSO
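Project-level role-based access control boils down to two lookups: which role a user holds on a given project, and which actions that role allows. The role names and actions below are hypothetical, not Hedra's actual permission model.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "engineer": {"read", "train", "deploy"},
    "admin": {"read", "train", "deploy", "manage_users"},
}

def is_allowed(assignments: dict, user: str, project: str, action: str) -> bool:
    """`assignments` maps (user, project) -> role; unknown pairs get no access."""
    role = assignments.get((user, project))
    return role is not None and action in ROLE_PERMISSIONS.get(role, set())
```

Denying by default (no role means no access) is the safer design: forgetting an assignment locks a user out rather than granting them everything.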

Integration with ML Ecosystem

Hedra connects easily with existing ML and DevOps stacks.

What it enables:

- Native support for PyTorch, TensorFlow, and Hugging Face

- SDKs and APIs for automation and scripting

- CI/CD integration for model updates and pipelines

How Hedra AI Stands Out

While many platforms offer compute and model deployment, Hedra AI differentiates itself through simplicity, scalability, and developer-centric design. Compared to traditional cloud providers or other AI infrastructure platforms, Hedra removes operational friction and speeds up experimentation, without locking you into one cloud.

| Feature | Hedra AI | AWS SageMaker | Replicate | Modal AI |
|---|---|---|---|---|
| Ease of Deployment | One-click deployment with CLI/UI | Requires configuration and scripting | Prebuilt templates, limited flexibility | Requires defining container images and functions in Python code |
| Multi-Cloud Support | Native support across AWS, GCP, Azure, bare metal | AWS-only | Limited (mostly GPU-focused) | Hosted infra only |
| GPU Orchestration | Dynamic GPU scheduling and cluster scaling | Manual setup or limited auto-scaling | Fixed GPU environments | Requires custom scheduling setup |
| Model Versioning | Built-in version control and rollback | Available with manual setup | Limited | Limited |
| Monitoring & Telemetry | Real-time metrics, logs, and job tracking | Built on Amazon CloudWatch; deeper tracking needs extra setup | Basic logs only | Limited to internal dashboards |
| Security & Compliance | Role-based access, HIPAA/GDPR-ready | Enterprise-level but complex to manage | Basic security | Not built for regulated industries |
| Pricing Transparency | Usage-based, clear tiers | Variable and complex | Pay-per-run (can get expensive at scale) | Pay-per-execution |
| Best For | ML teams, LLM training, hybrid cloud deployments | Enterprises with in-house DevOps | Indie builders and hobbyists | Fast prototyping with simple models |

Why Teams Choose Hedra

- Built for ML Workloads: Hedra AI is not a general cloud provider—it’s purpose-built for AI, making it easier to train, monitor, and deploy large-scale models.

- Developer-Centric Design: With CLI-first access and automation hooks, engineers can move fast and customize workflows.

- Scalable and Cost-Effective: Whether you’re training LLMs or hosting a production model, Hedra AI offers auto-scaling GPU orchestration without hidden fees.

- No Cloud Lock-In: Avoid getting stuck on one cloud. Hedra AI allows flexible deployment across multiple environments.

Who Should Use Hedra AI?

Hedra AI supports a wide range of users—from lean startups to large enterprise teams—who need flexible, powerful AI infrastructure without the hassle of managing hardware.

🚀 Startups

Rapid iteration, open model hosting, and no DevOps burden. Ideal for fast-moving AI teams.

🏢 Enterprises

Secure GPU clusters with RBAC, compliance, and hybrid cloud support for in-house LLMs.

🔬 Research Teams

Flexible compute for collaborative experiments, reproducibility, and framework switching.

💼 Agencies

Client-ready environments, reusable templates, and workspace separation made easy.

AI Startups Building with Open Models

Startups often need to iterate fast without managing complex infrastructure. Hedra AI provides immediate access to powerful GPUs, supports open-source models like Llama and Mistral, and offers usage-based pricing.

Teams can quickly move from experimentation to production with minimal setup. The simplified interface ensures even lean teams can launch and scale AI apps with confidence.

Enterprises Training Internal LLMs

Large organizations developing proprietary language models need scalable, secure environments. Hedra AI allows them to spin up distributed GPU clusters, integrate with internal ML workflows, and meet compliance needs.

With support for custom pipelines and multi-cloud orchestration, enterprises can future-proof their AI stack. Dedicated SLAs and role-based access controls ensure secure collaboration at scale.

Research Labs and Academic Institutions

Researchers benefit from Hedra’s flexible environments and seamless compute access. It supports reproducible experiments, switching between frameworks such as PyTorch and JAX, and simpler collaboration.

It’s particularly effective for grant-funded teams working across geographies or institutions. Shared environments and versioned experiments keep everyone aligned and productive.

AI Agencies and Consulting Firms

Consultants deploying AI for multiple clients can use Hedra AI to manage isolated environments, reuse templates, and roll out custom models without vendor constraints.

White-labeling options and workspace separation help manage multiple client accounts smoothly. Automated scaling helps agencies avoid infrastructure delays during critical delivery windows.

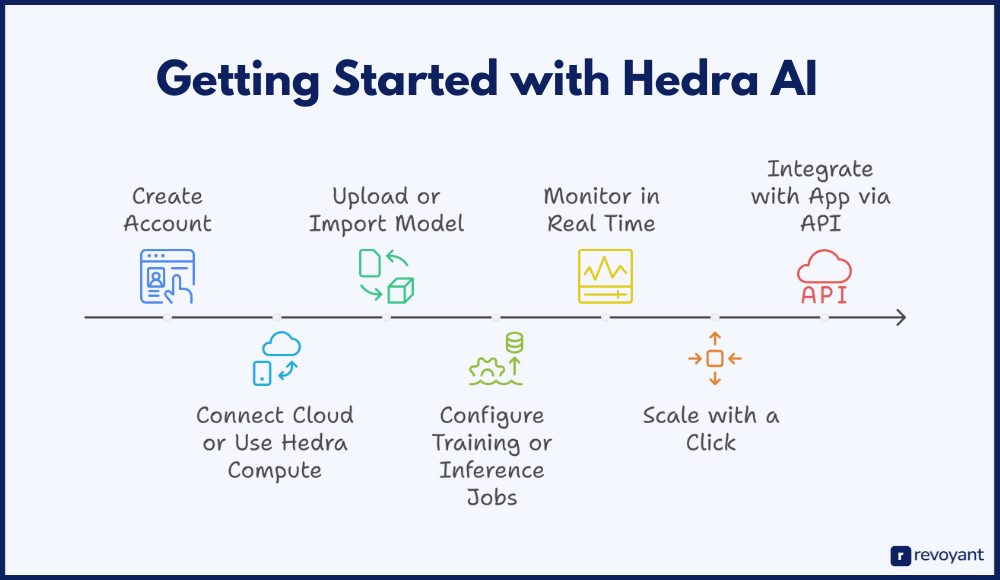

Getting Started with Hedra AI

Getting started with Hedra AI is designed to be simple—even for teams new to AI infrastructure. Whether you’re uploading your first model or scaling GPU workloads, the platform provides a streamlined experience from setup to deployment.

Step-by-Step Setup Guide

1. Create Your Account

Head over to hedra.com and sign up with your work email. You’ll get access to a secure dashboard where you can manage models, clusters, and teams in one place.

2. Connect Your Cloud or Use Hedra Compute

Choose your preferred setup—either bring your own cloud credentials (AWS, Azure, GCP) or use Hedra’s managed compute to get started without infrastructure overhead.

3. Upload or Import Your Model

You can upload pre-trained models directly or integrate with Hugging Face to import open-source models like LLaMA, Mistral, or Falcon.

4. Configure Training or Inference Jobs

Set up training jobs with custom parameters, or spin up inference endpoints for real-time applications. You can use the CLI, SDK, or dashboard depending on your workflow.

5. Monitor Everything in Real Time

Track GPU usage, cost estimates, model performance, and logs through Hedra’s built-in monitoring tools.

6. Scale with a Click

Need more power? You can scale clusters or endpoints up/down instantly, with intelligent auto-scaling rules to optimize usage and budget.

7. Integrate with Your App via API

Deploying models as a service is easy with Hedra’s REST APIs and SDKs—connect inference endpoints to your production stack without managing backend servers.

Automate Post-Production with AI

Post-production is often the silent time-killer in any content pipeline. From stitching raw footage and adjusting lighting to editing audio and writing descriptions—it’s where teams spend the most hours but see the least innovation.

Hedra AI changes that. By bringing intelligence and automation into post-production, it turns a manual, time-heavy process into a streamlined, near-instant workflow.

What You Can Do with Hedra AI:

1. Auto-Captioning with High Accuracy

Automatically generate clean, well-timed captions from audio or video content. Hedra uses advanced speech recognition to support multiple accents and languages, helping you publish faster while meeting accessibility standards.
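Once a speech recognizer returns timed segments, turning them into a caption file is mechanical. This sketch uses no Hedra API; it assumes segments arrive as hypothetical `(start_seconds, end_seconds, text)` tuples and writes them in the widely supported SubRip (.srt) format.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

def to_srt(segments) -> str:
    """Build SRT text: numbered cues, a time range, the caption, then a blank line."""
    blocks = []
    for i, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{i}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n")
    return "\n".join(blocks)
```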

2. Thumbnail Generation and Enhancement

Skip the guesswork of thumbnail creation. Hedra uses visual cues, scene dynamics, and engagement predictors to auto-generate thumbnails optimized for clicks—no graphic design tool needed.

3. Intelligent Highlight Detection

Using AI-driven summarization and scene analysis, Hedra identifies the most engaging moments in long-form videos. Easily repurpose content into short clips for social media, ads, or trailers without watching the full footage manually.

4. Scene Tagging and Content Categorization

Hedra applies natural language processing and computer vision to tag key topics, themes, objects, and speakers. This makes your content library easier to navigate, search, and reuse—especially at scale.

5. Metadata Generation at Scale

No need to write titles, descriptions, or SEO tags by hand. Hedra suggests optimized metadata that can boost discoverability across YouTube, social platforms, internal digital asset management (DAM) systems, or content management systems (CMSs).

6. Format and Resolution Automation

Need multiple versions of your content? Hedra can automatically export your final output in various formats and aspect ratios: MP4, WebM, vertical (9:16) for Reels, square (1:1) for Instagram feed posts, or 4K for presentations.
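Producing vertical or square cuts from a widescreen master starts with computing a crop box for each target aspect ratio. Here is a hypothetical center-crop calculation, not Hedra's method; subject-tracking reframing is more sophisticated, but the geometry is the same.

```python
from fractions import Fraction

def center_crop(width: int, height: int, aspect_w: int, aspect_h: int):
    """Largest centered crop (left, top, crop_w, crop_h) with the target aspect ratio."""
    target = Fraction(aspect_w, aspect_h)
    if Fraction(width, height) > target:
        # Source is wider than the target: keep full height, trim the sides.
        crop_w, crop_h = int(height * target), height
    else:
        # Source is taller than (or equal to) the target: keep full width, trim top/bottom.
        crop_w, crop_h = width, int(width / target)
    # Round down to even sizes; 4:2:0 chroma subsampling (common for H.264) needs them.
    crop_w, crop_h = crop_w // 2 * 2, crop_h // 2 * 2
    left, top = (width - crop_w) // 2, (height - crop_h) // 2
    return left, top, crop_w, crop_h
```

The resulting box can be handed to an encoder's crop step; FFmpeg's `crop` filter, for example, takes the same four values in `w:h:x:y` order.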

7. Audio Cleanup and Normalization

Hedra’s audio pipeline removes background noise, levels speech volume, and syncs voice tracks without extra plugins or editing rounds.
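Leveling speech volume often starts with measuring the signal's RMS level and applying a single gain to reach a target. This is a deliberately simple sketch, not Hedra's pipeline: it assumes mono float samples in [-1.0, 1.0], uses plain RMS rather than the perceptual LUFS loudness defined in ITU-R BS.1770, and does not attempt noise removal.

```python
import math

def rms_dbfs(samples) -> float:
    """RMS level of float samples in [-1.0, 1.0], in dB relative to full scale (dBFS)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def normalize(samples, target_dbfs: float = -20.0):
    """Apply a single gain so the RMS level reaches target_dbfs."""
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # silence or empty input: nothing to scale
    gain = 10 ** ((target_dbfs - current) / 20)
    # Hard-clip to the valid range; production tools use a smoother limiter here.
    return [max(-1.0, min(1.0, s * gain)) for s in samples]
```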

Conclusion

Hedra AI is purpose-built for the modern demands of machine learning teams. Whether you’re training foundation models, deploying real-time inference, or managing multi-cloud infrastructure, Hedra delivers a unified, developer-first experience that simplifies everything.

By removing traditional DevOps barriers and offering powerful orchestration out of the box, Hedra helps startups, enterprises, and research teams build faster, scale smarter, and stay in control of compute costs. If you’re serious about AI infrastructure—without the complexity—Hedra is worth exploring.

Frequently Asked Questions

1. Do I need to manage Kubernetes or containers with Hedra AI?

No. Hedra abstracts the complexity of containers, Kubernetes, and infrastructure provisioning. You can deploy, train, and scale models through a simple UI or CLI.

2. Can I use Hedra with my own cloud account?

Yes. Hedra supports BYOC (Bring Your Own Cloud), including AWS, Azure, and GCP. You can also use Hedra’s managed compute if you prefer a fully hosted setup.

3. Is Hedra only for LLMs or can I use it for other ML models?

Hedra is optimized for large-scale models, including LLMs, diffusion models, and other deep learning workloads. It supports common ML frameworks such as PyTorch, TensorFlow, and JAX.

4. How secure is the platform?

Hedra supports enterprise-grade security including role-based access control, SSO integrations, and compliance with GDPR, HIPAA, and SOC 2 standards.

5. What does pricing look like?

Hedra offers transparent, usage-based pricing with no hidden fees. You only pay for the compute and storage you use—ideal for both early-stage teams and enterprise workloads.